The New Attack Vector: When AI Becomes the Vulnerability

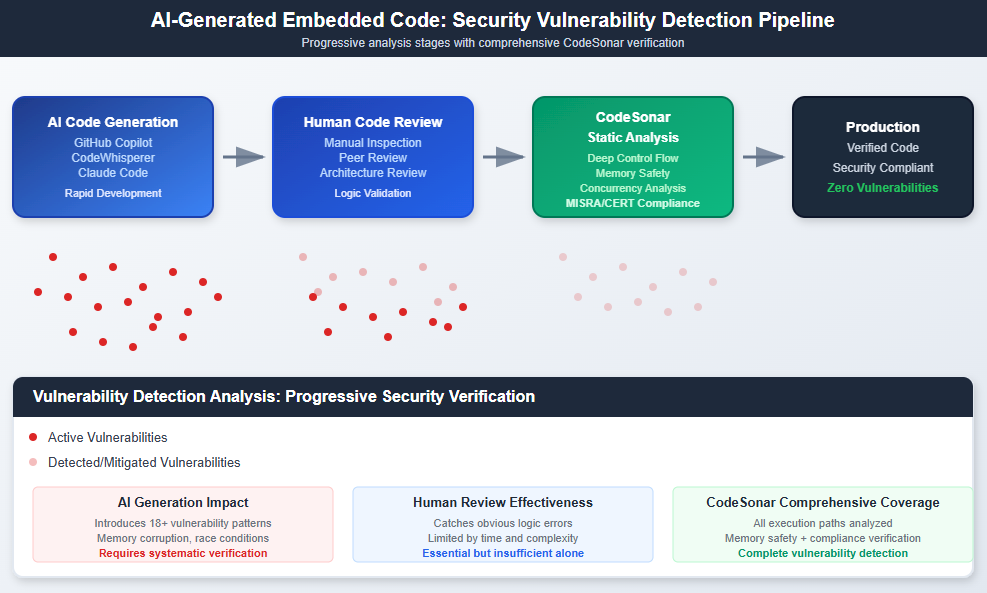

Bottom Line Up Front: AI-generated embedded code introduces novel security threats that traditional development processes weren’t designed to catch. CodeSonar’s deep analysis engine provides a comprehensive defense against these emerging vulnerabilities in safety-critical systems.

The embedded systems landscape is experiencing a seismic shift. Development teams increasingly rely on AI coding assistants to generate complex low-level code, or to fix coding standard violations (e.g., MISRA violations), for medical devices, automotive systems, and industrial controllers. While the productivity gains are undeniable, each AI-generated function can introduce security vulnerabilities that could compromise entire systems.

Static analysis has evolved from a quality assurance tool to a critical security control for AI-assisted development workflows.

The Embedded Systems AI Paradox: Speed vs. Security

Where Traditional Code Review Fails

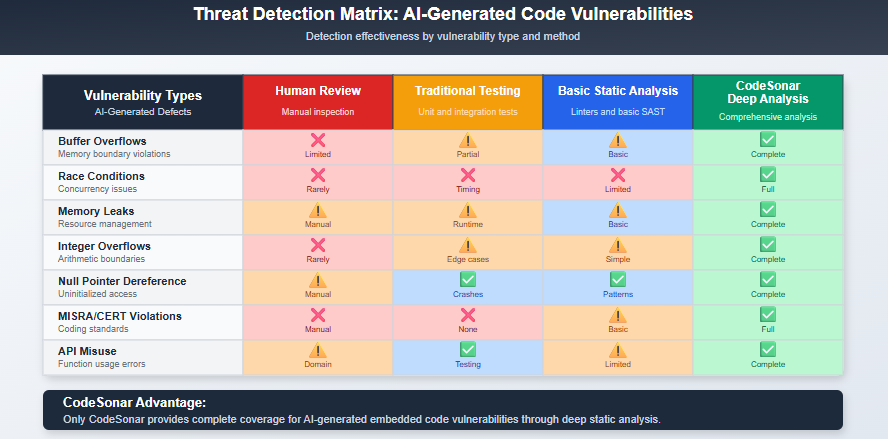

Human code reviewers excel at catching obvious logic errors and style violations. However, AI-generated code presents unique challenges that overwhelm traditional review processes:

Syntactic correctness without security awareness. AI models generate syntactically valid code that compiles without warnings yet contains subtle vulnerabilities. Memory allocation patterns look reasonable but introduce use-after-free conditions. Interrupt handlers appear properly structured but create race conditions under specific timing scenarios. Error handling is another critical gap: AI-generated code often implements new functionality without proper error checking, creating pathways for unexpected runtime failures that can cascade through safety-critical systems.

Scale beyond human capacity. AI tools can generate thousands of lines of embedded C/C++ code in minutes. No human reviewer can maintain the attention to detail required to catch memory safety violations across such volumes of code.
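To make the error-handling gap concrete, here is a minimal sketch in C. The sensor driver, its status codes, and the `simulate_fault` parameter are hypothetical stand-ins so the example compiles on a host; the point is the shape of the fix, not any specific HAL API.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical status codes for a sensor read; not from any real HAL. */
typedef enum { SENSOR_OK = 0, SENSOR_TIMEOUT, SENSOR_CRC_ERROR } sensor_status_t;

/* Hypothetical driver call, stubbed so the sketch runs on a host.
   On failure, *out is left untouched. */
static sensor_status_t sensor_read_raw(uint16_t *out, bool simulate_fault)
{
    if (simulate_fault) {
        return SENSOR_TIMEOUT;
    }
    *out = 512u;
    return SENSOR_OK;
}

/* Pattern often seen in generated code: the status is discarded, so
   'raw' is used uninitialised whenever the read fails.
       uint16_t raw;
       (void)sensor_read_raw(&raw, fault);
       return raw * 2u;
*/

/* Checked version: the caller receives an explicit failure plus a
   well-defined fallback value instead of indeterminate data. */
bool read_scaled(uint16_t *scaled, bool simulate_fault)
{
    uint16_t raw = 0u;
    if (sensor_read_raw(&raw, simulate_fault) != SENSOR_OK) {
        *scaled = 0u;            /* safe default; caller must act on 'false' */
        return false;
    }
    *scaled = (uint16_t)(raw * 2u);
    return true;
}
```

Returning a status and writing the result through a pointer keeps the failure visible at every call site, which is also what a static analyzer can then check for.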

The Resource-Constrained Environment Challenge

Embedded systems operate under constraints that AI models don’t fully understand:

- Limited memory spaces where buffer overflows have immediate hardware impact

- Real-time requirements where concurrency bugs cause system failures

- Direct hardware register access where pointer errors can damage peripherals

- Power constraints where inefficient code patterns drain batteries

CodeSonar’s Multi-Language Defense Against AI Vulnerabilities

Beyond Surface-Level Analysis

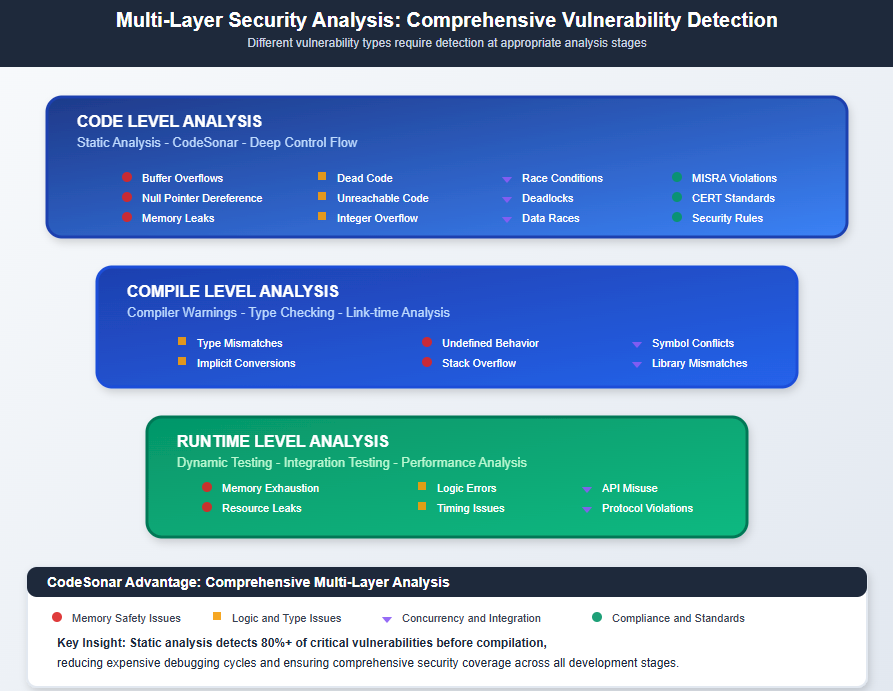

CodeSonar’s abstract execution engine provides the deep program understanding that AI vulnerability detection requires. Unlike basic linters that check syntax patterns, CodeSonar builds a complete representation of program behavior across all execution paths.

Advanced memory tracking follows data flow through complex pointer arithmetic, an area where AI often generates incorrect code. CodeSonar detects buffer overruns, null pointer dereferences, and memory leaks that human reviewers can easily miss in AI-generated code.
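As an illustration of the pointer-arithmetic slips this kind of tracking targets, the sketch below shows a byte-summing loop. The function name is hypothetical; the `<=` comparison mentioned in the comments is the off-by-one pattern that reads one byte past the buffer.

```c
#include <stddef.h>
#include <stdint.h>

/* Sums a byte buffer using pointer arithmetic.
   A frequent generated-code slip is 'p <= end', which dereferences the
   one-past-the-end position. This loop stops before reading it. */
uint32_t checksum_bytes(const uint8_t *buf, size_t len)
{
    uint32_t sum = 0u;
    if (buf == NULL) {
        return 0u;                            /* null input: nothing to sum */
    }
    const uint8_t *end = buf + len;           /* valid to form, not to read */
    for (const uint8_t *p = buf; p < end; ++p) {   /* '<', not '<=' */
        sum += *p;
    }
    return sum;
}
```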

Interprocedural (cross-function) analysis examines interactions between AI-generated functions and existing codebase components. This catches integration vulnerabilities in which individually correct functions create security holes when combined.
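A small, hypothetical example of such an integration bug: each function below is reasonable in isolation, but combining them without checking the sentinel return value produces an out-of-bounds read. The channel table and function names are invented for illustration.

```c
#include <stdint.h>

#define NUM_CHANNELS 4

/* Function A: individually correct. Returns the channel index for an ID,
   or -1 when the ID is unknown. */
int find_channel(uint8_t id)
{
    static const uint8_t ids[NUM_CHANNELS] = { 0x10u, 0x20u, 0x30u, 0x40u };
    for (int i = 0; i < NUM_CHANNELS; ++i) {
        if (ids[i] == id) {
            return i;
        }
    }
    return -1;
}

static const uint16_t channel_gain[NUM_CHANNELS] = { 100u, 200u, 300u, 400u };

/* Function B, as a generator might write it, assumes A always succeeds:
       return channel_gain[find_channel(id)];   // index -1 for unknown IDs
   Neither function is wrong alone; the out-of-bounds read exists only in
   the combination, which is what interprocedural analysis tracks. */

/* Combined safely: the sentinel is checked before it becomes an index. */
int get_gain(uint8_t id, uint16_t *gain)
{
    int idx = find_channel(id);
    if (idx < 0) {
        return -1;               /* propagate "unknown channel" */
    }
    *gain = channel_gain[idx];
    return 0;
}
```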

Multi-Language Coverage for Modern AI Development

AI coding tools often generate code in multiple languages within a single embedded project. CodeSonar supports this reality with analysis across:

- C/C++/C# for core embedded functionality and device drivers

- JavaScript for embedded web interfaces and configuration tools

Each language analysis engine understands AI-specific vulnerability patterns, from memory safety issues in C to injection vulnerabilities in dynamic languages.

Real-World Threat Scenarios: When AI Code Goes Wrong

Medical Device Memory Corruption

An AI assistant generates insulin pump dosage calculation code with a subtle integer overflow vulnerability. During clinical testing, the overflow condition never triggers. Six months post-deployment, a specific patient glucose pattern causes memory corruption, leading to incorrect dosage delivery.

CodeSonar Detection: Integer overflow analysis catches the boundary condition during static analysis, preventing field deployment of vulnerable code.

Regulatory Impact: FDA’s evolving AI/ML guidance emphasizes pre-market verification of AI-assisted medical software development; static analysis provides the documented verification trail required.
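The dosage formula below is a hypothetical, non-clinical stand-in for the scenario above, meant only to show what checked arithmetic looks like in C. The function names and units are assumptions; the test `b > UINT32_MAX / a` is a standard way to detect unsigned multiplication wraparound before it happens.

```c
#include <stdbool.h>
#include <stdint.h>

/* Checked multiply: reports wraparound instead of returning a wrapped value. */
bool mul_u32_checked(uint32_t a, uint32_t b, uint32_t *out)
{
    if (a != 0u && b > UINT32_MAX / a) {
        return false;            /* a * b would exceed UINT32_MAX */
    }
    *out = a * b;
    return true;
}

/* Hypothetical, non-clinical formula:
   dose_microunits = carbs_mg * microunits_per_g / 1000 (mg -> g).
   Unchecked 32-bit arithmetic would wrap silently for large inputs and
   yield a much smaller dose; here the request is rejected instead. */
bool compute_dose(uint32_t carbs_mg, uint32_t microunits_per_g,
                  uint32_t *dose_microunits)
{
    uint32_t product;
    if (!mul_u32_checked(carbs_mg, microunits_per_g, &product)) {
        return false;            /* caller must alarm or refuse, never dose */
    }
    *dose_microunits = product / 1000u;
    return true;
}
```

With these illustrative inputs, 40,000 × 100,000 fits in 32 bits while 50,000 × 100,000 does not: exactly the kind of boundary a test campaign misses if it never exercises large values.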

Automotive CAN Bus Exploitation

AI-generated CAN message parsing code contains a buffer overflow in the message length validation. The vulnerability only triggers with malformed packets that exceed standard CAN frame sizes. An attacker crafts specific CAN messages that exploit this overflow to gain control of vehicle systems.

CodeSonar Detection: Buffer analysis traces data flow through the parsing function, identifying the overflow condition before integration testing begins.

ISO 21434 Alignment: Cybersecurity risk assessment requirements mandate identification of potential attack vectors; static analysis systematically identifies vulnerabilities that could enable cyber attacks.
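A minimal sketch of the length validation the parsing scenario is missing. The receive-buffer layout (4-byte ID, 1-byte length, payload) is a hypothetical format chosen for illustration, not a real driver interface; the 8-byte limit reflects the classic CAN data field.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define CAN_MAX_DLEN 8u   /* classic CAN payload limit (CAN FD allows 64) */
#define HDR_LEN      5u   /* hypothetical layout: 4-byte ID + 1-byte length */

typedef struct {
    uint32_t id;
    uint8_t  len;
    uint8_t  payload[CAN_MAX_DLEN];
} can_msg_t;

/* Parses [id3 id2 id1 id0][len][payload...] from a receive buffer.
   Vulnerable shape: memcpy(msg->payload, &buf[5], buf[4]) with no checks.
   The length byte is attacker-controlled, so it is validated against both
   the destination size and the number of bytes actually received. */
bool parse_can_frame(const uint8_t *buf, size_t buf_len, can_msg_t *msg)
{
    if (buf == NULL || msg == NULL || buf_len < HDR_LEN) {
        return false;
    }
    uint8_t len = buf[4];
    if (len > CAN_MAX_DLEN || (size_t)len > buf_len - HDR_LEN) {
        return false;            /* malformed: drop instead of copying */
    }
    msg->id = ((uint32_t)buf[0] << 24) | ((uint32_t)buf[1] << 16) |
              ((uint32_t)buf[2] << 8)  |  (uint32_t)buf[3];
    msg->len = len;
    memcpy(msg->payload, &buf[HDR_LEN], len);
    return true;
}
```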

Industrial Control System Race Conditions

An AI coding tool generates interrupt handler code for a chemical processing control system. The code appears correct but contains a race condition between temperature sensor readings and safety shutdown logic. Under specific timing conditions, the race condition prevents emergency shutdowns.

CodeSonar Detection: Concurrency analysis models interrupt behavior and identifies the race condition that could bypass safety controls.

NIST AI RMF Application: Risk management requires technical measures to identify AI system failures; static analysis provides the systematic verification needed.
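The sketch below shows one common remedy for the shared-data side of this scenario, using C11 atomics. The thresholds, the ISR stand-in, and the function names are hypothetical; on a real MCU you may also need a critical section or RTOS primitive, and whether `_Atomic int32_t` is lock-free depends on the target.

```c
#include <stdatomic.h>
#include <stdint.h>

#define TEMP_SHUTDOWN_C 120   /* hypothetical trip point */
#define TEMP_WARN_C     100   /* hypothetical warning level */

/* Written by the sensor ISR, read by the control loop. On an 8- or 16-bit
   MCU a plain int32_t access can be torn (half old, half new); _Atomic
   makes each load and store indivisible. */
static _Atomic int32_t g_temp_c;

/* Stand-in for the sensor ISR; a real one is attached to a vector. */
void temp_sensor_isr(int32_t reading_c)
{
    atomic_store(&g_temp_c, reading_c);
}

typedef enum { ACT_NONE, ACT_WARN, ACT_SHUTDOWN } action_t;

/* Racy shape: reading g_temp_c separately in each comparison lets the ISR
   change it between comparisons, so the decision mixes two readings.
   Fix: take one snapshot and make every decision on that value. */
action_t evaluate_safety(void)
{
    int32_t t = atomic_load(&g_temp_c);   /* single, indivisible snapshot */
    if (t >= TEMP_SHUTDOWN_C) {
        return ACT_SHUTDOWN;
    }
    if (t >= TEMP_WARN_C) {
        return ACT_WARN;
    }
    return ACT_NONE;
}
```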

Implementing CodeSonar for AI-Assisted Development

Pre-Commit Verification Gates

Integration with development workflows ensures AI-generated code undergoes comprehensive analysis before entering version control:

IDE Integration streamlines the analysis workflow as developers review and modify AI suggestions. CodeSonar plugins allow developers to quickly analyze code and identify potential vulnerabilities, enabling them to request alternative implementations from AI tools when issues are detected.

CI/CD Pipeline Integration blocks merges of changes that contain high-severity findings. Automated analysis runs on every change that includes AI-generated code, creating a verification trail for regulatory compliance.

Compliance Documentation for AI-Generated Code

Regulatory auditors increasingly question the safety and security of AI-assisted development processes. CodeSonar provides the documented verification trail that standards like ISO 26262 and DO-178C require.

MISRA C/C++ Compliance checking verifies that AI-generated code conforms to the coding guidelines widely required for automotive and aerospace safety work. CodeSonar’s built-in rule sets catch deviations that AI tools commonly introduce. As AI coding assistants become more sophisticated at generating RTOS drivers and real-time control algorithms, compliance verification becomes even more critical.
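As an illustration of the kind of deviation a MISRA checker flags: MISRA C:2012 Rule 16.4 requires every `switch` statement to have a `default` label. Generated code often omits it when every enum value is listed. The mode enum and duty-cycle values below are hypothetical.

```c
#include <stdint.h>

typedef enum { MODE_IDLE = 0, MODE_RUN = 1, MODE_FAULT = 2 } op_mode_t;

/* Without a default label, a corrupted mode value (bit flip, bad cast)
   would leave 'duty' unassigned. The default routes it to a safe state. */
uint8_t output_duty_pct(op_mode_t mode)
{
    uint8_t duty;
    switch (mode) {
    case MODE_IDLE:
        duty = 0u;
        break;
    case MODE_RUN:
        duty = 75u;
        break;
    case MODE_FAULT:
        duty = 0u;
        break;
    default:                 /* unexpected value: fail safe */
        duty = 0u;
        break;
    }
    return duty;
}
```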

CERT Secure Coding Standards verification protects against the most dangerous vulnerability classes that affect embedded systems. CodeSonar identifies and reports CERT rule violations across the entire codebase.
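The sketch below illustrates SEI CERT C rule MEM30-C (do not access freed memory). The log-buffer type and helpers are hypothetical; clearing the pointer after `free` is one common mitigation, turning a later dangling use into a checkable NULL. Many safety-critical projects avoid dynamic allocation altogether, as MISRA C:2012 Rule 21.3 requires; the pattern still matters wherever heap use is permitted.

```c
#include <stdlib.h>
#include <string.h>

typedef struct {
    char  *data;
    size_t cap;
} log_buf_t;

int log_buf_init(log_buf_t *lb, size_t cap)
{
    lb->data = malloc(cap);
    lb->cap  = (lb->data != NULL) ? cap : 0u;
    return (lb->data != NULL) ? 0 : -1;
}

/* Frees the buffer and clears the pointer so any later use sees NULL
   instead of a dangling pointer into freed memory. */
void log_buf_release(log_buf_t *lb)
{
    free(lb->data);
    lb->data = NULL;
    lb->cap  = 0u;
}

/* Refuses to write through a released (NULL) or too-small buffer. */
int log_buf_write(log_buf_t *lb, const char *msg)
{
    size_t n = strlen(msg);
    if (lb->data == NULL || n + 1u > lb->cap) {
        return -1;
    }
    memcpy(lb->data, msg, n + 1u);   /* copy including the terminator */
    return 0;
}
```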

Custom Rule Development allows organizations to codify their specific security requirements for AI-generated code. Teams can create rules that flag AI-specific anti-patterns based on their experience with different coding assistants.

Regulatory Risk Mapping:

| Risk | Regulatory Standard | CodeSonar Mitigation |
|---|---|---|
| Memory Corruption in Safety Functions | ISO 26262 ASIL-D | Buffer overflow detection, pointer analysis |
| Cybersecurity Vulnerabilities | ISO 21434 | CERT rule compliance, taint analysis |
| AI-Assisted Medical Device Software | FDA AI/ML Guidance | Documented verification trail, custom rules |
| Software Assurance for Critical Systems | DO-178C | Control flow analysis, dead code detection |

Measuring Success: AI Security Metrics That Matter

Vulnerability Density Tracking

Organizations implementing CodeSonar for AI-generated code typically measure:

AI vs. Human-Written Code Quality through defect density comparisons. Teams track vulnerabilities per thousand lines of code, segmented by generation method.
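Defect density is simple arithmetic: defects divided by thousands of lines of code (KLOC). A minimal helper is sketched below; the function name is hypothetical, and what counts as a "defect" (for example, confirmed static analysis findings) is a team-level choice.

```c
/* density = defects / (loc / 1000) = 1000 * defects / loc.
   Returns -1.0 when there is no code to measure. */
double defects_per_kloc(unsigned defects, unsigned long loc)
{
    if (loc == 0ul) {
        return -1.0;
    }
    return 1000.0 * (double)defects / (double)loc;
}
```

Computing it separately for AI-generated and hand-written code gives the segmented comparison described above; for instance, 12 defects in 24,000 lines is 0.5 defects per KLOC.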

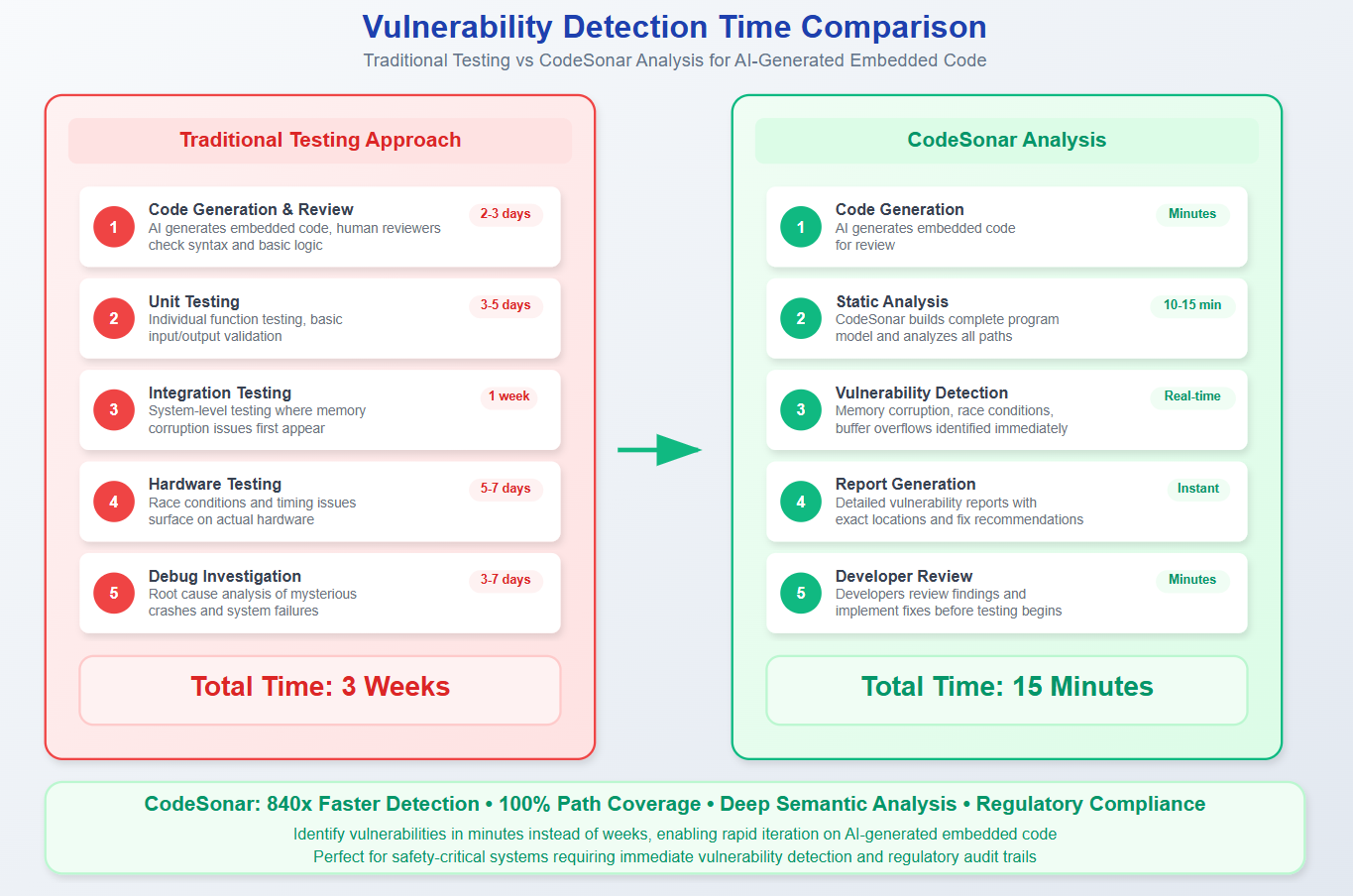

Time-to-Detection for different vulnerability categories. Static analysis catches memory safety issues within minutes of code generation, while traditional testing might require weeks to surface the same problems.

False Positive Rates specific to AI-generated code patterns. CodeSonar’s analysis engine learns from AI coding patterns, reducing noise while maintaining high detection accuracy.

Development Velocity Impact

Contrary to common assumptions, comprehensive static analysis actually accelerates AI-assisted development:

Reduced Debug Cycles as vulnerabilities are caught before integration testing. Teams spend less time troubleshooting mysterious system crashes caused by memory corruption. What previously required weeks of debugging now surfaces in minutes during code analysis.

Faster Code Review as human reviewers focus on business logic rather than hunting for security vulnerabilities. CodeSonar handles the systematic security analysis, freeing experts for higher-value architectural decisions.

Regulatory Approval Acceleration through automated compliance verification. Documentation generated by CodeSonar analysis streamlines safety certification processes.

As AI coding assistants evolve to generate increasingly complex embedded functionality—from cryptographic implementations to real-time kernel modifications—the risk-to-velocity ratio will only worsen without comprehensive static analysis. Organizations implementing CodeSonar now are positioning themselves for this inevitable expansion of AI capabilities into safety-critical code domains.

The Path Forward: Building AI-Resilient Development Processes

Immediate Implementation Steps

Organizations concerned about AI-generated code security should prioritize:

Pilot Project Selection on non-critical systems to establish baseline measurements and team familiarity. Choose projects with significant AI code generation but limited safety impact.

Tool Integration Planning to minimize workflow disruption. CodeSonar’s extensive IDE and CI/CD integrations allow gradual rollout without forcing process changes.

Team Training focused on interpreting static analysis results for AI-generated code. Developers need to understand how AI-specific vulnerabilities differ from traditional coding errors.

Long-Term Strategic Considerations

AI Tool Diversity Management as teams adopt multiple coding assistants. CodeSonar provides consistent security analysis regardless of which AI tool generated the code. As the next generation of AI assistants begins targeting specialized embedded domains—generating cryptographic routines, real-time schedulers, and hardware abstraction layers—comprehensive static analysis becomes the only scalable verification approach.

Regulatory Landscape Evolution as standards bodies address AI-assisted development. Organizations with comprehensive static analysis programs will be better positioned for evolving compliance requirements. The NIST AI Risk Management Framework already emphasizes technical measures for AI system verification, while FDA guidance increasingly scrutinizes AI-assisted medical device development processes.

Threat Model Updates to account for AI-specific attack vectors. Security teams must consider how adversaries might exploit AI-generated code patterns that differ from human coding habits. Static analysis provides the systematic coverage needed to identify these novel vulnerability patterns as they emerge.


Conclusion: Static Analysis as AI Security Infrastructure

The integration of AI coding tools into embedded development is irreversible. Organizations that recognize this reality and implement comprehensive static analysis now will capture productivity benefits while avoiding the security pitfalls that will trap slower-moving competitors.

CodeSonar provides the technical foundation for secure AI-assisted embedded development. Its deep analysis capabilities, multi-language support, and regulatory compliance features make it the essential infrastructure for teams serious about harnessing AI while maintaining safety and security standards.

The question isn’t whether AI will transform embedded development—it’s whether your security controls will keep pace with the transformation.

Frequently Asked Questions

Q: How can static analysis catch AI-generated vulnerabilities that human experts miss?

A: Human code reviewers excel at understanding business logic and architectural decisions, but they struggle with systematic vulnerability detection across large volumes of AI-generated code. CodeSonar builds a complete abstract representation of program behavior, tracing data flow through complex execution paths that would take human reviewers hours to analyze manually.

The tool systematically explores execution paths, identifying subtle interactions between functions that create vulnerabilities. AI-generated code often appears syntactically correct while containing semantic errors that only surface under specific conditions, exactly the type of issue that comprehensive static analysis excels at finding.

Q: Won’t integrating static analysis slow down our AI-accelerated development cycles?

A: Static analysis actually accelerates development by catching issues before they enter expensive debugging cycles. Traditional approaches to finding memory corruption or concurrency issues in embedded systems can take weeks of integration testing and hardware debugging.

CodeSonar analysis completes in minutes, providing immediate feedback on AI-generated code quality. Teams report spending significantly less time troubleshooting mysterious system crashes and more time on feature development and system optimization.

Q: Our embedded developers are already resistant to AI tools—how do we add another layer of complexity?

A: Position static analysis as developer empowerment rather than additional oversight. Many embedded developers resist AI tools because they don’t trust the generated code quality. CodeSonar provides the verification layer that gives developers confidence in AI suggestions.

Rather than requiring developers to manually audit every AI-generated function for memory safety issues, static analysis handles the systematic verification automatically. This allows experienced developers to focus on hardware interfaces, system architecture, and performance optimization—the areas where their expertise provides the most value.

Q: How do we handle regulatory audits when we can’t fully explain how AI generated our code?

A: Regulatory auditors care about verification processes, not code generation methods. Static analysis provides documented evidence that code meets safety and security requirements regardless of how it was written. CodeSonar generates audit trails showing compliance with MISRA C, CERT standards, and custom organizational requirements.

The verification documentation demonstrates systematic analysis of potential failure modes, which is exactly what regulatory frameworks like ISO 26262 and DO-178C require. Some auditors actually prefer AI-assisted development with comprehensive static analysis because the verification process is more systematic than traditional manual code review.

Q: As AI coding tools improve, won’t they eventually eliminate the need for static analysis?

A: AI models will continue improving at generating syntactically correct code, but they face fundamental limitations in understanding the semantic requirements of safety-critical embedded systems. Memory management, real-time constraints, and hardware interface requirements involve domain-specific knowledge that extends beyond pattern matching in training data.

Even as AI tools become more sophisticated, embedded systems are simultaneously becoming more complex. The attack surface expands as AI generates code for cryptographic implementations, real-time schedulers, and safety-critical control algorithms. Comprehensive verification becomes more important, not less, as AI capabilities expand into these domains.

Q: What happens if our AI coding assistant becomes unavailable or changes its model?

A: Static analysis provides consistent verification regardless of code generation method. Whether your team uses GitHub Copilot, CodeWhisperer, Claude Code, or writes code manually, CodeSonar applies the same comprehensive security analysis.

Organizations with established static analysis workflows can adapt to different AI tools or return to traditional development methods without losing their verification capabilities. The analysis rules and compliance requirements remain constant even as code generation methods evolve.

Next Steps: Contact CodeSecure to discuss implementing comprehensive static analysis for your AI-assisted embedded development workflows. Your systems are too critical to leave AI-generated code unchecked.
